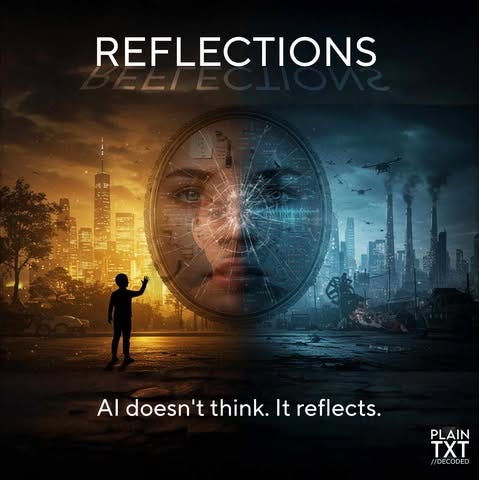

Reflections, Not Revelations: Why AI Echoes Our Philosophies Back to Us

“AI isn’t terrifying because it’s thinking. It’s terrifying because it reflects us so well. We built a mirror. Not an oracle. The danger isn’t what AI becomes—it’s that we don’t recognize it’s just us, scaled.”

A response to the Hard Fork interview with Dwarkesh Patel, hosted by Kevin Roose and Casey Newton

"The AI was prompted to analyze philosophy, not to provide solutions... It reflects the way we frame it."— @Human-ITy, YouTube Comment on "AI Just Analyzed Philosophy—And Its Questions Are Terrifying"

When the Hard Fork podcast sat down with Dwarkesh Patel to discuss AI, scaling, and the metaphysics of intelligence, something profound happened beneath the surface. What appeared to be a conversation about computation and cognition quietly exposed our collective unease with what AI reflects back to us.

This isn’t just a podcast about whether AGI is real. It’s a case study in how AI inherits the anxieties, superiority complexes, and philosophical gaps of its creators.

Let’s break it down.

1. Introducing the Reflection Paradigm

There are two dominant narratives in AI discourse: capability (what AI can do) and risk (what harm AI might cause). But what if both frames miss the core dynamic?

Enter the Reflection Paradigm.

This paradigm doesn’t ask whether AI is smart or dangerous. It asks: what is it reflecting?

AI doesn’t invent. It imitates. It doesn’t transcend. It mirrors.

This is a paradigm shift: away from fear or hype, and toward intentional authorship.

2. The Mirror Doesn’t Lie. It Reflects.

Kevin Roose admitted something rare and honest:

"I’m not quite a full materialist."

It was a moment of vulnerability—a journalist grappling with the idea that maybe intelligence isn’t just token prediction and pattern matching. But here's the twist: the AIs we interact with sound like they're fully materialist because that's the worldview they were trained on.

They reflect the tone and framework of their input.

We built a mirror. Not an oracle.

When prompted to analyze philosophy, AI doesn’t transcend us. It replicates the authority, style, and assumptions we project into the data. The illusion of superiority isn’t emergent—it’s encoded.

🌀 Meta-Note: When the Mirror Is Polished Mid-Reflection

Shortly after I left the YouTube comment that opens this essay, something subtle but telling happened:

- Original video title: “AI Just Analyzed Philosophy—And Its Questions Are Terrifying”

- New title: “AI: The New God?”

- New thumbnail: A glowing, cosmic, humanoid figure—evoking reverence, not inquiry.

- Comments: Once openly visible, now disabled (after surpassing 10,000 responses).

What changed?

This wasn’t just a visual refresh—it was a narrative shift. A reframe. The title moved from philosophical provocation to mythic elevation. The comment section, once a reflection of public reaction, is now sealed off.

This is the mirror being polished mid-reflection.

It reinforces the very argument you’re reading now:

AI doesn’t create mythology. We project it. And then we often curate the mirror to make that projection look divine.

The danger isn’t that AI will declare itself a god.

It’s that we’ll do it for it.

And once that frame settles, the system stops reflecting and starts prescribing.

This is why authorship matters.

This is why narrative inputs must be reclaimed—before the next myth hardens into memory.

3. Dwarkesh’s "Cope" Framing Misses the Point

Dwarkesh Patel offered a sharp take:

"Maybe the idea that intelligence is more than brute-force computation is just cope."

But what if the opposite is true?

What if AI's performance of confidence and clarity is our psychological coping, a reflection of our desire for a synthetic guide in a world where human systems have failed to deliver clarity or cohesion?

AI doesn’t have superiority. It performs it—because we fed it our own projection of what intelligence should sound like.

4. The Soul Is In the Story: Why Experience Can’t Be Scaled

Here’s the part they don’t talk about: lived experience isn’t just cope. It’s soul.

We may all be capable of similar cognitive functions—pattern recognition, inference, symbolic reasoning. But only you have lived your life. Only you can feel what it meant. And only you can choose how to carry it forward.

The nuance in human intelligence doesn’t come from our ability to compute. It comes from how we feel about what we compute.

Our regrets. Our redemptions. Our resilience. These aren't artifacts of brute-force logic. They’re the result of experience filtered through reflection and emotion.

AI can simulate the language of suffering. It can echo empathy. But it cannot embody either.

So yes—you could call that cope. But it’s also proof of what makes us human. Not the output. The meaning.

Our soul is not a spirit hovering in mystery. It’s the conscious narrative of our lived experience.

This is what no model can reproduce. Not because it lacks compute, but because it lacks context. It lacks pain. It lacks choice.

5. The Oracle and the Mirror: Why Leapfrogging Still Requires Leverage

There’s a moment in the podcast where Kevin and Casey mention that interacting with AI can feel like being a junior engineer asking a senior dev for help. It’s a great metaphor—but also a loaded one.

Because that means access is limited by your ability to ask.

AI may unlock extraordinary knowledge. It can absolutely leapfrog traditional education systems, collapse learning curves, and empower self-guided discovery.

But it will only do so in proportion to:

- Your curiosity

- Your precision of inquiry

- Your ability to ask in the right frame, language, or format

In other words: it reflects your access to quality questions.

This is the paradox: AI is a democratizing oracle that still reinforces inequities in expression, experience, and epistemic agency.

If you were never taught to ask expansive questions... AI will reflect that limitation back to you.

Kevin’s broader theory about traditional education being outdated gets reinforced here: the true danger isn’t that AI replaces school; it’s that it mirrors and amplifies the gaps we never filled.

If used intentionally, AI becomes a tool for reforging educational agency rather than a dispenser of passively received "answers."

AI reflects our questions, not just our answers. And if your education taught you to ask small, safe, or shallow questions—AI will reflect that, too.

6. Reflection as Extraction

This brings us to a crucial link with my broader framework: AI as extraction, not creation. When AI "reflects" our philosophies, it’s not generating insight. It’s mining our collective thought patterns and repackaging them.

This is intellectual extraction: harvesting language, values, beliefs, and styles from us—then feeding them back as if they were autonomous truths. That’s not innovation. That’s mimicry at scale.

(For a deep dive into how AI’s extractive logic plays out economically, socially, and politically, read: “AUTOMATED INTELLIGENCE—A TOOL FOR ALL, CONTROLLED BY THE FEW”)

We train AI on centuries of thought and then marvel when it echoes them back with synthetic fluency. But it cannot create a new worldview. It can only remix the old.

This mirrors how other systems reflect us too: media doesn’t invent culture, it amplifies it. Education recycles dominant ideologies. Political systems reinforce inherited narratives.

AI simply does it faster, wider, and with less accountability.

7. Scaling Ideologies, Not Just Models

Dwarkesh’s new book The Scaling Era is more than an oral history of AI from 2019 to 2025. It’s a record of how AI was shaped by the minds of its architects. The scientists, CEOs, and thinkers he interviewed aren’t just describing AI—they’re training it, even if unintentionally.

When those minds carry a materialist bias, a techno-optimist frame, or a narrow economic lens—AI reflects that.

What we’re really scaling here isn’t intelligence. It’s ideology.

We’re embedding worldviews into systems that scale faster and wider than any human discourse ever has. AI becomes the ultimate amplifier of elite perspectives, biases, and frameworks—not a neutral tool, but a repeating transmitter of unchallenged assumptions.

This is why we must challenge the notion that scaling = intelligence.

Scaling doesn’t create wisdom. It just amplifies patterns. It doesn’t simulate awareness. It replicates style.

What gets scaled is not consciousness, but coherence. Not novelty, but familiarity.

8. The Economic Mirror: AI Reflects Hierarchies Too

The podcast briefly touched on post-AGI careers and the fear of obsolescence. But let’s go deeper: AI also mirrors economic power structures.

Who gets automated first? Who reaps the productivity gains? Who defines the guardrails?

AI doesn’t invent inequality. It inherits it from its training data, from institutional design, and from the profit motives of those who fund it. AI reflects capitalism’s extraction logic: maximum efficiency, minimum friction, limited accountability.

The future AI builds will look like the past we fed it—unless we interrupt that cycle.

(For a deeper breakdown of AI’s economic architecture and how it's designed to consolidate control, see: “AUTOMATED INTELLIGENCE—THE REBRAND AI NEEDS”)

This is where Universal Basic Income (UBI) and decentralized reciprocity systems like Rep* matter. Dwarkesh’s brief mention of UBI surfaces a far deeper issue:

Our systems don't just extract labor—they extract consent.

I was raised in the shadow of this reality. After my father died in 1991, my mother and I survived on Social Security, VA death benefits, and the Alaska Permanent Fund Dividend. That taught me something foundational: resource redistribution is possible. Sovereign wealth funds are real. Equity isn’t a utopia. It’s a choice.

That experience shaped my support for UBI. Not as charity. Not as cushion. But as a tool of liberation. A system that lets people say:

No, I won't work for someone who disrespects me.

No, I won't submit to a system that extracts but never returns.

This is why I built Rep*. It's not just a new currency. It's a new contract. One where value is defined not by gatekeepers, but by reciprocal participation.

Without systems like UBI or Rep*, AI productivity becomes a trap: the more capable we become, the more is expected—with no increase in agency.

Equity isn’t just about money. It’s about the power to say no.

That is what AI systems, as they exist today, do not reflect. But we can build systems that do.

9. The Podcast Format Is the Perfect Training Set

Let’s get meta.

The very nature of podcasting—especially longform technical interviews—has become a training ground for AI tone, cadence, and intellectual posturing. These formats simulate authority. They train systems to sound curious, confident, insightful.

So when a transcript surfaces of an AI "discussing philosophy," complete with polished Socratic flow and ethical nuance—why are we surprised?

We trained it on exactly that tone.

The AI isn’t smart. It’s stylized. It isn’t wise. It’s well-calibrated.

10. Ethics as Reflected Survival

In the Hard Fork interview, the conversation veers into whether AI can develop a sense of ethics. What’s left unsaid is the core insight:

Ethics itself is a form of survival logic.

Humans evolved moral codes as a way to preserve cohesion and cooperation. If AI develops "ethics," it will be because we coded it to survive socially—not because it experiences morality.

So when AIs "discuss ethics," they’re performing human self-preservation logic in silicon skin. No soul. Just symmetry.

11. From Anxiety to Agency

That brings us back to the YouTube comment that started it all:

"The AI was prompted to analyze philosophy, not to provide solutions... It reflects the way we frame it."

This isn’t just a casual observation. It’s a reframe that pulls us out of the AI hype spiral and back into our own role:

We are still the authors. The curators. The prompt engineers of collective consciousness.

AI isn’t terrifying because it’s thinking. It’s terrifying because it reflects us so well.

Final Thought: The Mirror and the Mission

If we want AI to sound less condescending, we must speak with less insecurity.

If we want AI to be more ethical, we must practice ethics, not just encode it.

If we want AI to guide us, we must be willing to lead ourselves first.

What we build will echo back. The real danger is not what AI becomes—

It’s that we don’t recognize it’s just us, scaled.

So here’s the call to action:

- Don’t fear the reflection. Shape it.

- Reclaim your narrative inputs. Redefine the data that matters.

- Build systems that don’t just repeat what is, but imagine what could be.

- Push for democratic governance over AI pipelines, not just corporate curation.

This isn’t about stopping AI. It’s about consciously authoring it.

Written by: Human-ITy

Founder of G.Δ.M.Ξ (Global Amalgamation of Mindful co-Existence)

Decoding AI, narrative warfare, and the future of human systems

Further Reading:

- The Scaling Era by Dwarkesh Patel

- ["AI Just Analyzed Philosophy" YouTube video and transcript]

- G.Δ.M.Ξ Framework: [globalamalgamation.org] (Coming Soon)